A team of researchers from Adobe Research and the Australian National University has developed an artificial intelligence (AI) model that can transform a single 2D image into a high-quality 3D model in just 5 seconds.

The work, detailed in their research paper “LRM: Large Reconstruction Model for Single Image to 3D,” could revolutionize industries such as gaming, animation, industrial design, augmented reality (AR), and virtual reality (VR).

“Imagine if we could instantly create a 3D shape from a single image of an arbitrary object. Broad applications in industrial design, animation, gaming, and AR/VR have strongly motivated relevant research in seeking a generic and efficient approach towards this long-standing goal,” the researchers wrote.

Training with massive datasets

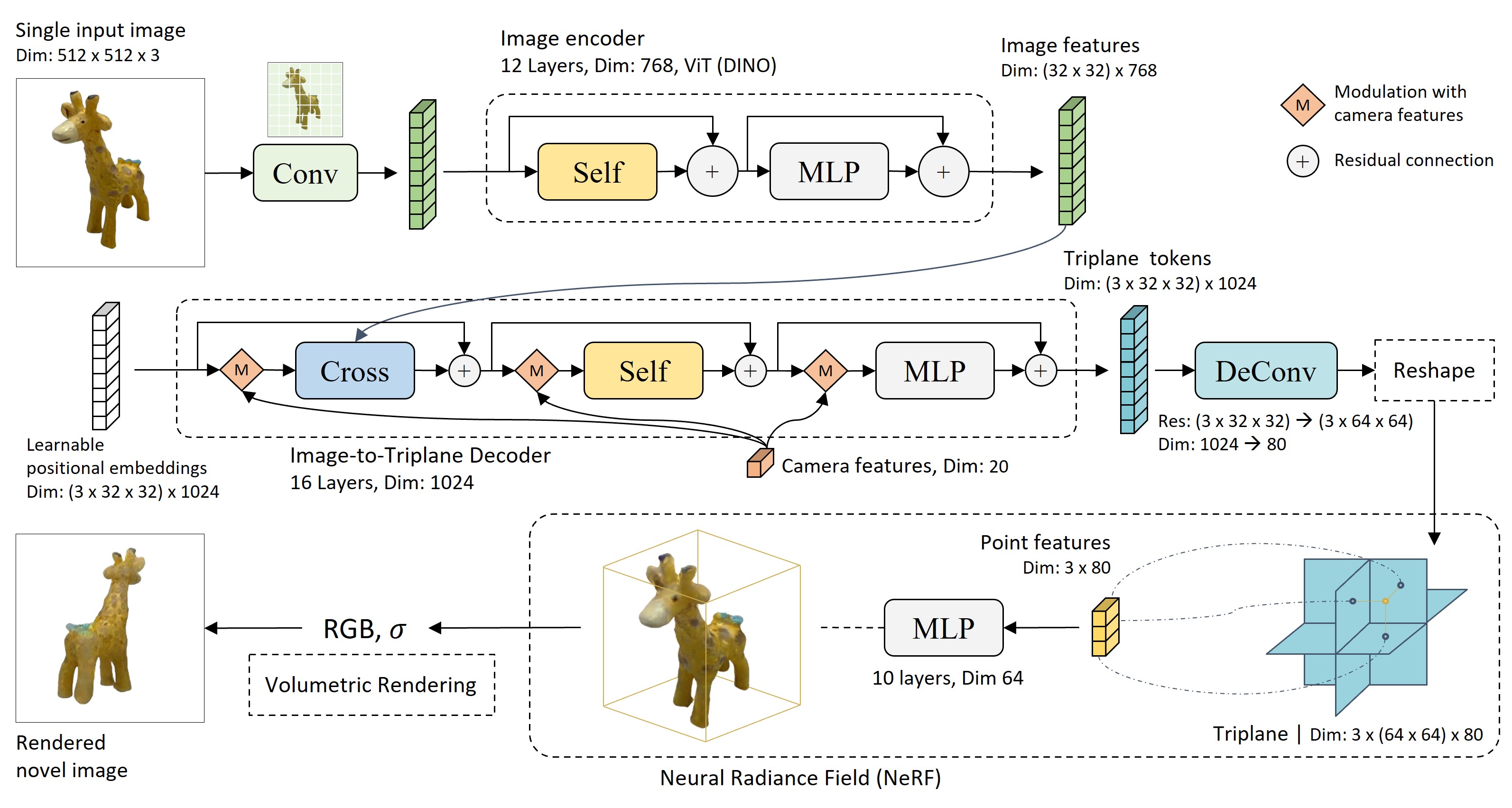

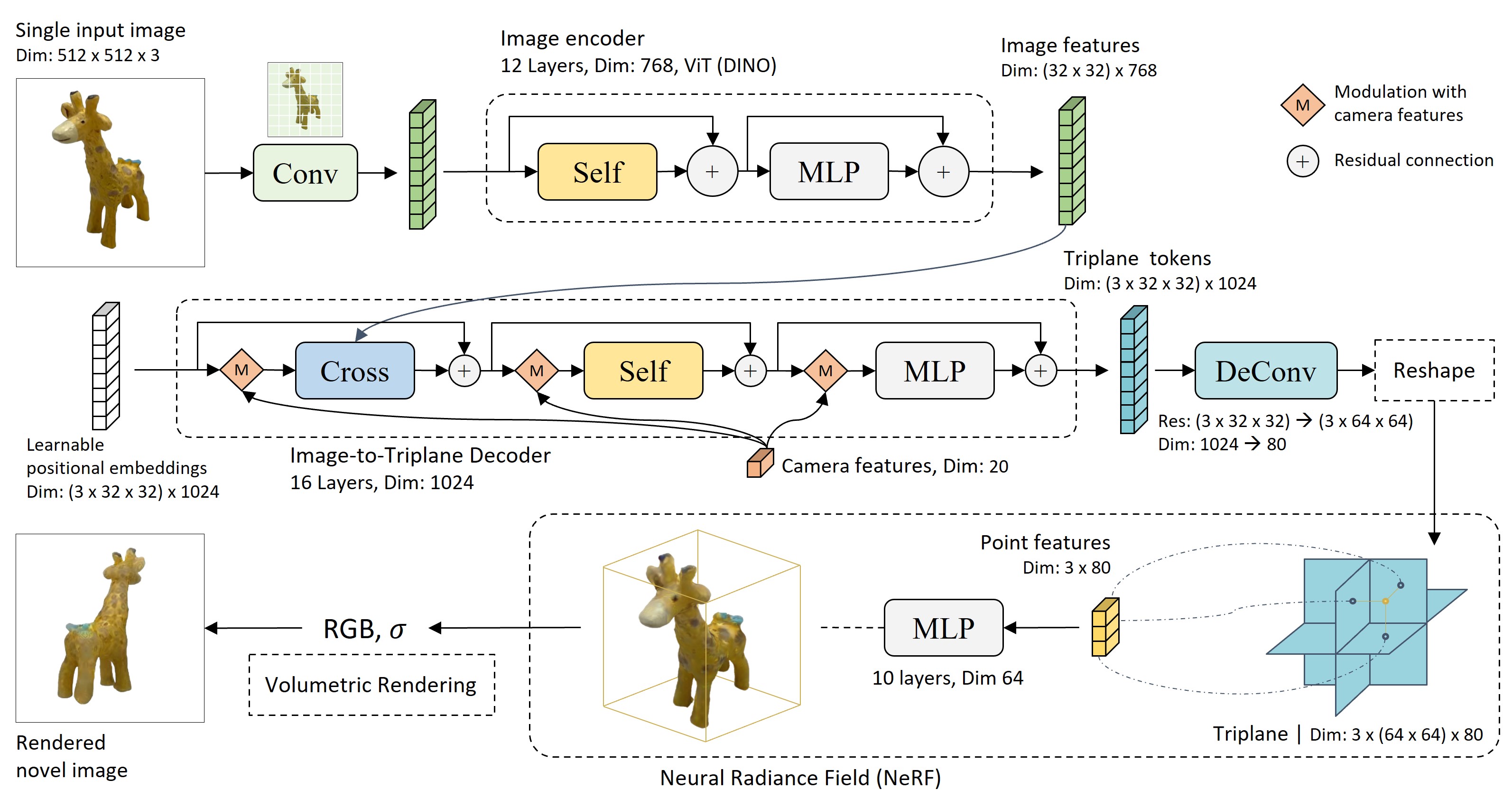

Unlike previous methods trained on small datasets in a category-specific fashion, LRM uses a highly scalable transformer-based neural network architecture with over 500 million parameters. It is trained on around 1 million 3D objects from the Objaverse and MVImgNet datasets in an end-to-end manner to predict a neural radiance field (NeRF) directly from the input image.
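
For readers unfamiliar with neural radiance fields, the sketch below illustrates the general idea of how a NeRF turns predicted densities and colors into a pixel: samples along a camera ray are alpha-composited from front to back. This is the standard NeRF volume rendering rule, not code from the LRM paper, and the function name `composite_ray` and the sample values are hypothetical, chosen only for illustration.

```python
import math


def composite_ray(densities, colors, step):
    """Alpha-composite samples along one ray, front to back.

    densities: volume density (sigma) at each sample along the ray
    colors:    (r, g, b) color at each sample
    step:      distance between consecutive samples (assumed uniform)
    Returns the rendered pixel color and the leftover transmittance.
    """
    transmittance = 1.0          # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        # Opacity contributed by this sample
        alpha = 1.0 - math.exp(-sigma * step)
        weight = transmittance * alpha
        for i in range(3):
            pixel[i] += weight * rgb[i]
        # Light remaining for samples further along the ray
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Hypothetical ray: empty space, then a dense red surface, then blue behind it
densities = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
pixel, remaining = composite_ray(densities, colors, step=1.0)
print(pixel, remaining)
```

In this toy ray the dense red sample absorbs nearly all the light, so the blue sample behind it contributes almost nothing to the pixel. A model such as LRM is described as predicting the radiance field itself from the input image; rendering it into views would rely on a compositing step along these lines.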

“This combination of a high-capacity model and large-scale training data empowers our model to be highly generalizable and produce high-quality 3D reconstructions from various testing inputs including real-world in-the-wild captures and images from generative models,” the paper states.

The lead author, Yicong Hong, said LRM represents a breakthrough in single-image 3D reconstruction. “To the best of our knowledge, LRM is the first large-scale 3D reconstruction model; it contains more than 500 million learnable parameters, and it is trained on approximately one million 3D shapes and video data across diverse categories,” he said.

Experiments showed LRM can reconstruct high-fidelity 3D models from real-world images, as well as images created by AI generative models like DALL-E and Stable Diffusion. The system produces detailed geometry and preserves complex textures like wood grains.

Potential to transform industries

The LRM’s potential applications are vast and exciting, extending from practical uses in industry and design to entertainment and gaming. It could streamline the process of creating 3D models for video games or animations, reducing time and resource expenditure.

In industrial design, the model could expedite prototyping by creating accurate 3D models from 2D sketches. In AR/VR, LRM could enhance user experiences by generating detailed 3D environments from 2D images in real time.

Moreover, LRM’s ability to work with “in-the-wild” captures opens up possibilities for user-generated content and the democratization of 3D modeling. Users could potentially create high-quality 3D models from photographs taken with their smartphones, creating new creative and commercial opportunities.

Blurry textures a problem, but method advances field

The researchers acknowledged that LRM has limitations, such as blurry textures in occluded regions of the object. But they said the work shows the promise of large transformer-based models trained on huge datasets to learn generalized 3D reconstruction capabilities.

“In the era of large-scale learning, we hope our idea can inspire future research to explore data-driven 3D large reconstruction models that generalize well to arbitrary in-the-wild images,” the paper concluded.

You can see more of the impressive capabilities of the LRM in action, with examples of high-fidelity 3D object meshes created from single images, on the team’s project page.
