The edge of the network isn’t always where you find the most powerful computers. But it is the place where you can find the most ubiquitous technology.

The edge means things like smartphones, desktop PCs, laptops, tablets and other smart gadgets that operate on their own processors. They have internet access and may or may not connect to the cloud.

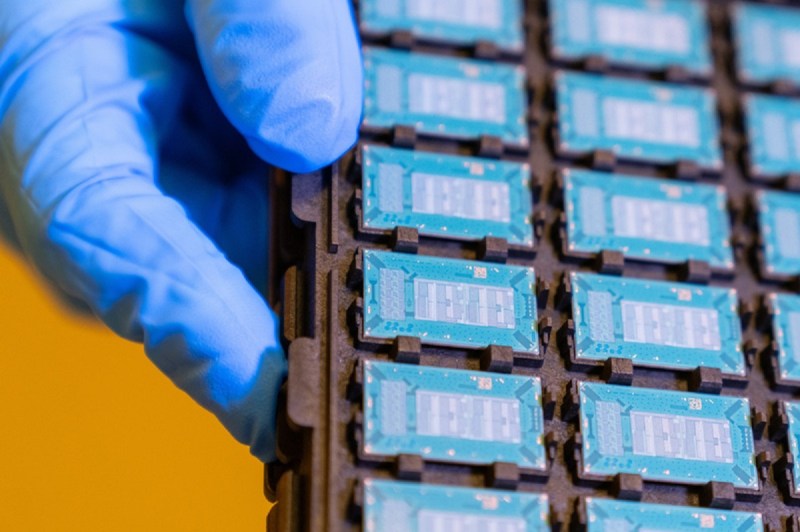

And so big companies like Intel are figuring out just how much technology we’re going to be able to put at networking’s edge. At the recent Intel Innovation 2023 conference in San Jose, California, I talked with Intel exec Sandra Rivera about this and more. We brought up the question of just how powerful AI will be at the edge and what that tech will do for us.

I also had a chance to talk about the edge with Pallavi Mahajan, the corporate vice president and general manager for NEX (networking and edge) software engineering at Intel. She’s been at the company for 15 months, with a focus on the new vision for networking and the edge. She previously worked at Hewlett Packard Enterprise, driving strategy and execution for HPC software, workloads and the customer experience. She also spent 16 years at Juniper Networks.

Mahajan said one of the things edge AI will do is enable us to have a conversation with our desktop. We can ask it when we last talked with someone, and it will search through our work history and give us an answer almost instantly.

Here’s an edited transcript of our interview.

VentureBeat: Thanks for talking with me.

Pallavi Mahajan: It’s really good to meet you, Dean. Before I get into the actual stuff, let me quickly step back and introduce myself. I’m Pallavi Mahajan, corporate vice president and GM for networking and edge software. I have been here at Intel for 15 months. I joined just as Network and Edge was forming as a group. Traditionally, this space was served by many business units. Look at the way the edge is growing: the whole distributed edge is everything outside of the public cloud, right up to your client devices. I’m an iPhone person; I love the iPhone.

About the new edge

If you think about it, there’s a donut that gets formed. Think of the center, the hole, as the public cloud. Then, whether you’re going all the way out to the telcos, to your industrial machines, or to the devices that are there, like the point-of-sale devices in your retail chain, you have that entire spectrum. That is what we call the donut, and it is what Intel wants to focus on. This is why this business unit, the Network and Edge group, was created.

Again, Intel has a lot of history here through the IoTG business that we used to have. We’ve been working with a lot of customers. We’ve gained a lot of insight. Intel quickly realized that the opportunity to consolidate all these businesses is now. When you look at the edge, of course, you have the far edge. You have the near edge.

Then you have the telcos. The telcos now want to get into the edge space. There’s a lot of connectivity needed to connect all of that. That is exactly what Network and Edge (NEX) does. Whether you’re looking at the low-end edge devices or the high-end edge devices, the connectivity, the NICs that go as part of it, the IPU fabric that goes as part of it, that’s all part of the NEX charter.

The pandemic changes things

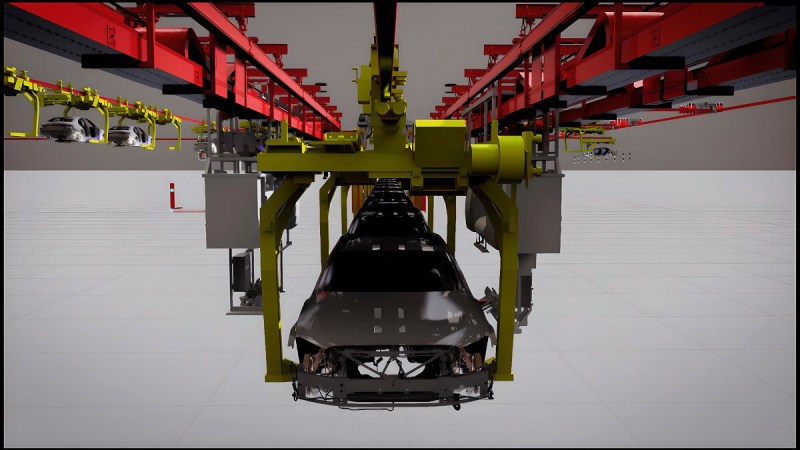

Again, I think timing is everything. Since the pandemic, we are seeing that more and more enterprises are looking into automating. As a classic example, take a very well-known automobile manufacturer. They always wanted to do automated welding defect detection, but they could never figure out how to do it. With the pandemic happening and no one showing up in the factories, now you have to have these things automated.

Think about the retail stores, for example. I live in London. Prior to the pandemic, hardly any of the retail stores had self-checkout. These days, I don’t even have to interact with anyone in the grocery store. I go in and everything is self-checkout. All of this has fast-tracked automation. You saw our demos. In fashion, for example, you have AI now telling you what to wear and what is not going to look good on you, all of that stuff.

Everything, the Fit:match and Fabletics experience that you saw, the Remind experience that you saw where Dan talked about how he can have his PC automatically generate an email to others. All of this, in very different ways and forms, is enabled by the technology that we develop here at NEX. It was the vision [for those who started NEX]. They were very focused. They understood that, for us to play in the space, this is not just a hardware play. This is a platform play. When I say platform, it means that we have to play with the hardware and we have to play with the software.

In Pat Gelsinger’s keynote, you saw Pat talk about Project Strata. As Pat eloquently explained, you start with the onboarding. See, if you look into the edge, the edge is about scale. You have many devices. Then, all these devices are heterogeneous.

Whether you’re talking about different vendors, different generations or different software, it’s very heterogeneous. How do we make it easy for this heterogeneous, large-scale set of nodes to be onboarded and managed? Our job is to make it easy for the edge to grow and for enterprises to invest more in the edge.

If you look into Project Strata, of course, the most fundamental piece is the onboarding piece. Then on top of it is the orchestration piece. The edge is all about a lot of applications now, and the applications are very unique. If I am in a retail store, I will have an application that is doing the transaction, that the point of sale has to do. I will have another application which is doing my shelf management. I have an application which is doing my inventory management.

Orchestrating apps at the edge

How do I go about orchestrating these applications? More and more AI is in all these applications. Again, take retail as an example. When I walk in, there’s a camera that is watching me and my body pattern, and it judges whether there is a risk of theft or not. Then when I’m checking out at the self-checkout, again, there’s a camera with AI incorporated in it, which is checking: hey, did I pick up lemons or did I end up picking oranges?

Again, as you look into it, more and more AI is getting into the space. That’s the orchestration piece. Then on top of all of this, every enterprise wants to get more and more insights. This is where the observability piece comes in, with a lot of data getting generated. Edge is all about data. In fact, Pat talked about the three laws. The law of physics, which means a lot of data is going to get generated at the edge. The law of economics, which is that businesses want to automate quickly. Then the law of the land, which is that governments don’t want the data to move out of the country because of privacy and security concerns. That’s all driving the growth of the edge. That’s where Project Strata comes in. Intel has always had a good hardware portfolio.

Now we’re building a layer on top of it so that we can make a platform play. Honestly, when we go and talk to our customers, they don’t want to make a soup by buying the ingredients from many different vendors. They want a solution. Enterprises would like a solution that actually works. They want something working in two or three weeks. That’s the platform play that Intel is in.

The edge wins on privacy

VentureBeat: Okay, I have a bunch of questions. I guess that it feels like privacy is the edge’s best friend.

Mahajan: Yes. Security, scale, heterogeneity: if I am an IT leader at the edge, these are the things that would keep me up at night.

VentureBeat: Do you think that overcomes other – some other forces maybe that were saying everything could be in the cloud? I guess we’re going to wind up with a balance of some things in the cloud, some things in the edge.

Mahajan: Yeah, exactly. In fact, this is a huge debate. People like to say that the pendulum has swung. It was, what, a couple of decades back that everything was moving over to the cloud. Now, with a lot of interest in the edge, there’s a line of thought that the pendulum is swinging towards the edge. I actually think it’s somewhere in the middle. Generative AI is a perfect example of how this is going to balance the pendulum swing.

I’m a huge believer, and this is a space that I live and breathe all the time. With generative AI, we are going to have more and more of the large models deployed in the cloud. Then the small models will be on the edge, or even on our laptops. When that happens, you need constant interaction between the edge and the cloud. As for the claim that everything will run on the edge, I don’t think that’s going to happen.

This is a space which will innovate really fast. You can already see it. From the day OpenAI made its first announcement until now, about 120 new large language models have been announced. That space is going to innovate faster. I think it’s going to be a hybrid AI play where the model is going to be sitting in the cloud and part of the model is actually going to run inference on the edge.

If you think about it from an enterprise point of view, that is what they would want to do. Hey, I don’t want to invest in more and more infrastructure. If I have existing infrastructure that I can use to get the inferencing going, then I’ll do that. OpenVINO, as Pat was talking about, is exactly the software layer that enables you to do this hybrid AI play.

Layers of security

VentureBeat: Do you think security is going to work better in either the cloud or the edge? If it does work better in one side, then it seems like that’s where the data should be.

Mahajan: Yeah, definitely. When you’re talking about the cloud, you don’t have to worry about security on each of your servers because, as long as your perimeter security is there, you’re more or less assured that you’re covered. At the edge, the problem is that you need to make sure every single device is secure.

Especially with AI, if I am now deploying my models on these edge devices, the model is like proprietary data. It’s my intellectual property. I want to make sure it’s very secure. This is where, when we talk about Project Strata, there are multiple layers, and security is built into every single layer. How do you onboard the device? How do you build a root of trust within the device? All the way up to when you have your workloads running, how do you know that this is a valid workload and not a malicious workload which is now running on this device?

With Project Amber, we have the ability to make sure there is a secure enclave where our models are protected. The lack of solutions in this space was a reason why enterprises were hesitant to invest in the edge. Now with all these solutions, and the fact that they want to automate more and more, there is going to be huge growth at the edge.
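One of the questions Mahajan raises, how a device knows that a workload is valid rather than malicious, can be illustrated with a digest allowlist. This is only a minimal sketch of the general idea, not how Project Strata or Project Amber actually work; the function names, the allowlist, and the sample workload bytes are all hypothetical.

```python
import hashlib
import hmac
from typing import Iterable


def sha256_hex(payload: bytes) -> str:
    """Hex-encoded SHA-256 digest of a workload image or model file."""
    return hashlib.sha256(payload).hexdigest()


def is_trusted_workload(payload: bytes, allowed_digests: Iterable[str]) -> bool:
    """Return True only if the payload's digest matches an approved digest."""
    digest = sha256_hex(payload)
    # compare_digest does a constant-time comparison of the two strings.
    return any(hmac.compare_digest(digest, allowed) for allowed in allowed_digests)


# Hypothetical workload bytes standing in for a model the enterprise approved.
approved_model = b"shelf-management-model-v1"
allowlist = {sha256_hex(approved_model)}
```

A device would refuse to start anything for which `is_trusted_workload` returns `False`. In practice the allowlist itself would need to be signed and anchored in the device's root of trust, which this sketch leaves out.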

VentureBeat: It does make sense to talk about hardware and software investments together. I did wonder why Intel hasn’t really come forward on something that Nvidia has been pushing a lot, which is the metaverse. Nvidia’s Omniverse stack really has enabled a whole lot of progress on that. They’re getting behind the Universal Scene Description standard as well. Intel has been very silent on all of that. I felt like the metaverse would be something where, hey, we’re going to sell a lot of servers. Maybe we should get in on that.

Mahajan: Yeah, our approach here at Intel is to encourage an open ecosystem, which means that today, you could use something which is an Intel technology. Tomorrow, if you want to bring in something else, you could go ahead and do that. On your question about the metaverse, there’s an equivalent of this that we call SceneScape, which is more about situational awareness and digital twins.

As part of Project Strata, what we are doing is we have a platform. It starts with the foundational hardware, but it does not need to be Intel hardware. You saw how we are working very closely with our entire hardware ecosystem to make sure that the software that we build on top of it has heterogeneity support.

The base: you start with the foundational hardware. Then on top of it, you have the infrastructure layer. The infrastructure layer is all the fleet management and the security pieces that you talked about. Then on top of it is the AI application layer. OpenVINO is a part of it, but it has a lot more. Again, to your point about Nvidia, if I pick up an Nvidia box, I get the whole stack.

Proprietary or open?

VentureBeat: Mm-hmm, it’s the proprietary end-to-end part.

Mahajan: Yes. Intel’s approach traditionally has been that we will give you tools, but we are not providing you the entire solution. This is a change that we want to bring, especially from an edge point of view, because our end persona, which is the enterprise, does not have that many savvy developers. Now you have an AI application layer which gives you a low-code, no-code environment. You have a box into which you can bring all the data that is coming in from many devices.

How do you go about processing that, quickly getting your models trained and the inferencing happening? Then on top of it are the applications. One of the applications is the situational awareness application that you’re talking about, which is exactly what Nvidia’s metaverse is. Having been in this industry, I truly believe that the merit of this is that the stack is completely decomposable. I’m not tied to a certain software stack. Tomorrow, if Arm has a better model optimization layer, I can bring that layer in on top. I don’t have to feel like it’s one stack that I have to work with.

VentureBeat: I do think that there’s a fair amount of other activity outside of Nvidia, like the Open Metaverse Foundation. The effort to promote USD as a standard is also not necessarily tied to Nvidia hardware as well. It feels like Intel and AMD could both be shouting out loudly that the open Metaverse is actually what we support, and you guys are not. Nvidia is actually the one saying that we are when they’re only partially supporting it.

Mahajan: Yeah, I’m going to look up the Open Metaverse Foundation. I was talking about the edge and why the edge is unique. Especially when we talk about AI at the edge, AI is all about inferencing. Enterprises don’t want to spend time training models. They bring in existing models. Then they just customize them. The whole idea is, how do I quickly get the model? Now get me the business insights.

It is exactly the AI application layer that I was talking about. It has tech that enables you to bring in an existing model, quickly fine-tune it with just two or three clicks, get going and then start getting answers. To the retail example: am I buying a lemon or am I buying an orange?

Smartphones vs PCs

VentureBeat: Arm went public. They talked about democratizing AI through billions of smartphones. A lot of Apple’s hardware already has neural engines built into them as well. I wondered, what’s the additional advantage of having the AI PC democratized as well, given that we’re also in a smartphone world?

Mahajan: Yeah, when we think of AI we always think of the cloud. But what’s driving all the demand for AI? It is all of these smartphone devices. It is our laptops. As Pat talked about, it is the applications that we are creating, like Remind.ai, which is a super application that makes sure that I’m very organized. These applications are the ones that are actually driving AI.

I look at it as, traditionally, when you start to think of AI, you think of cloud and then pushing it over. We at Intel are now more and more seeing this, that the client at the edge is pushing the demand of AI over to the cloud. We think you could say the same thing one way or the other, but I think it gives you a very different perspective.

To your question, yes, you need to democratize AI on your smart devices, which is what Arm was talking about. OpenVINO is the layer for doing model optimization, compression and all of that. At Intel, we are fairly committed. Even in the AI PC example that you saw, it’s the same software that runs across the AI PC. It’s the same software that runs across the edge when it comes to your AI model, inferencing optimization, all of that stuff.

VentureBeat: There are some more interesting examples I wanted to ask you about. I write a lot about games. There’s been a lot of talk about making the AI smarter for game characters. Until now, they were characters that might give you three or four answers in a video game and that’s it. They aren’t smart enough to talk to for three hours or something like that. They just repeat what they’ve been told to tell the player.

The large language models, if you plug them into these characters, then you get something that’s smart. Then you also have a lot of costs associated –

Mahajan: And delay in the experience.

VentureBeat: Yeah, it could be a delay, but also $1 a day for a character maybe, $365 per year for a video game that might sell for $70. The cost of that seems out of control. Then you can limit that, I guess. Say, okay, well, it doesn’t have to access the entire language model.

Mahajan: Exactly.

VentureBeat: It just has to access whatever it needs to be evidently smart.

Mahajan: Exactly, this is exactly what we call hybrid AI.

VentureBeat: Then the question I have is, if you narrow it down, at some point does it not become smart? Does it become not really AI, I guess? Something that can anticipate you and then be ready to give you something that maybe you weren’t expecting.

Mahajan: Yeah, my eyes are shining because this is the space that excites me the most. This is a space that I’m actually dealing with. For the industry, it all started with a large language model that had to be hosted, and OpenAI had to have an entire Azure HPC data center dedicated to doing that. By the way, prior to joining Intel, I was with HPE, in the HPC business. I knew exactly the scale of the data centers that all of these companies were building, the complexities that come in and the cost that it brings. Very soon, we started to see a lot of technology innovation around how to get into this whole hybrid AI space. We at Intel ended up participating in it.

In fact, one of the things that is happening is speculative inferencing. With speculative inferencing, you pick a large language model. There is a teacher-student model where you’ve taught the student. Think of the student as having a certain amount of knowledge. You spend some time training the student. Then, only if the student is asked a question it does not know the answer to does it go to the cloud, to the teacher, to ask the question. When the teacher gives it an answer, it puts that in its memory and learns.
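The student-first, teacher-as-fallback flow described here can be sketched roughly as follows. It mirrors the routing idea as Mahajan describes it in the interview, not Intel's implementation and not the token-level speculative decoding technique from the research literature. `HybridAssistant`, the two model callables, and the 0.8 confidence threshold are all hypothetical stand-ins, and the "models" are plain Python functions rather than real LLMs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# Hypothetical signatures: the local "student" returns (answer, confidence),
# the cloud "teacher" returns just an answer. Neither is a real vendor API.
LocalModel = Callable[[str], Tuple[Optional[str], float]]
CloudModel = Callable[[str], str]


@dataclass
class HybridAssistant:
    local_model: LocalModel
    cloud_model: CloudModel
    threshold: float = 0.8  # assumed cut-off for "the student knows this"
    memory: Dict[str, str] = field(default_factory=dict)
    cloud_calls: int = 0

    def ask(self, question: str) -> str:
        # 1. Answers previously learned from the teacher come from memory.
        if question in self.memory:
            return self.memory[question]
        # 2. Try the student on the device first.
        answer, confidence = self.local_model(question)
        if answer is not None and confidence >= self.threshold:
            return answer
        # 3. Only now go to the cloud, and remember what the teacher said.
        self.cloud_calls += 1
        answer = self.cloud_model(question)
        self.memory[question] = answer
        return answer


def toy_student(question: str) -> Tuple[Optional[str], float]:
    known = {"lemon or orange?": "lemon"}
    if question in known:
        return known[question], 0.95
    return None, 0.0


def toy_teacher(question: str) -> str:
    return f"teacher answer to: {question}"


assistant = HybridAssistant(toy_student, toy_teacher)
```

The cost argument from the game-character discussion shows up in `cloud_calls`: every question the student or the memory can handle is one fewer paid round trip to the large model.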

Speculative inferencing is just one of the ways that you can work on hybrid AI. Here is another. Suppose you figured out that the large model can be broken into multiple layers. You distribute those layers. To your gaming example, if you have three laptops with you or three servers in your data center, that big model gets broken into three pieces and distributed across those three machines. You don’t even have to go and talk to the cloud now.
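Breaking a model into pieces and spreading them across three machines can be sketched as a simple layer partition. This is a toy illustration under stated assumptions: the "layers" are scalar functions, the "machines" are just list entries, and `partition` and `run_pipeline` are hypothetical helper names rather than any real framework's API.

```python
from typing import Callable, List, Sequence

Layer = Callable[[float], float]


def partition(layers: Sequence[Layer], n_devices: int) -> List[List[Layer]]:
    """Split layers into n_devices contiguous, near-equal stages."""
    base, extra = divmod(len(layers), n_devices)
    stages: List[List[Layer]] = []
    start = 0
    for i in range(n_devices):
        size = base + (1 if i < extra else 0)
        stages.append(list(layers[start:start + size]))
        start += size
    return stages


def run_pipeline(stages: List[List[Layer]], x: float) -> float:
    # Each outer iteration stands in for one machine; in a real system the
    # intermediate value would travel over the network between machines.
    for stage in stages:
        for layer in stage:
            x = layer(x)
    return x


# Seven toy "layers"; a real model's layers would be tensor operations.
layers: List[Layer] = [lambda v, k=k: v * 2 + k for k in range(7)]
stages = partition(layers, 3)
```

Running the stages in order gives the same result as running the unsplit model, which is the property that makes this kind of split possible. The trade-off is the cost of passing intermediate results between machines.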

Take the Remind.ai demo that Pat did, with Dan coming in. We talked about how you can record everything that happens on your laptop. It is not common knowledge, but Dan from Remind started working on it just five days back. Dan ended up meeting Sachin in a forum. He walked Sachin through what he’s doing. Everything that he was doing was using the cloud, and he was using a Mac. Sachin was like, “No, listen, there’s a lot of awesome stuff that you could go out and use on Intel.”

In five days, he’s now using an Intel laptop. He does not have to go to GPT-4 all the time. He can choose to run the summarization on his laptop. If he wants, he can also split it, running part of the summarization on his laptop and part of it in the cloud. I actually believe that this is a space where there’ll be a lot of innovation.

VentureBeat: I saw Sachin Katti (SVP for NEX) last night. He was saying that maybe within a couple of years, we’ll have this service for ourselves where we can basically get that answer. I think Pat also mentioned how he could ask the AI, “When did I last talk to this person? What did we talk about?” et cetera. That seems like recall, which is not that smart.

When you’re bringing in intelligence into that and it’s anticipating something, is that what you’re expecting to be part of that? The AI is going to be smart in searching through our stuff?

Mahajan: Yeah, exactly.

VentureBeat: That’s interesting. I think, also, what can go right about that and what can go wrong?

Mahajan: Yes, a lot of awkward questions about it. I think the key is that the data stays on your laptop. This is where the hybrid AI thing comes in. With hybrid AI, we don’t need to send everything over to GPT-4. I can process it all locally. Five days back, when I started talking with Dan, Dan was like, “Bingo, if I can make this happen.” Right now, when he goes and talks to customers, they’re very worried about data privacy. I would be too, because I don’t want someone to be recording my laptop and all that information to be going over the internet. Now you don’t even need to do that. You saw that he just shut off his Wi-Fi and everything was getting summarized on his laptop.
