
Today, New York-based Datadog, which delivers cloud observability for enterprise applications and infrastructure, expanded its core platform with new capabilities.

At its annual DASH conference, the company announced Bits, a novel generative AI assistant to help engineers resolve application issues in real-time, as well as an end-to-end solution for monitoring the behavior of large language models (LLMs).

The offerings, particularly the new AI assistant, are aimed at simplifying observability for enterprise teams. However, they are not generally available yet. Datadog is testing the capabilities in beta with a limited number of customers and will make them generally available at a later stage.

When it comes to monitoring applications and infrastructure, teams have to do a lot of grunt work, from detecting and triaging an issue to remediation and prevention. Even with observability tools in the loop, this process requires sifting through massive volumes of data, documentation and conversations from disparate systems. It can take hours, sometimes even days.


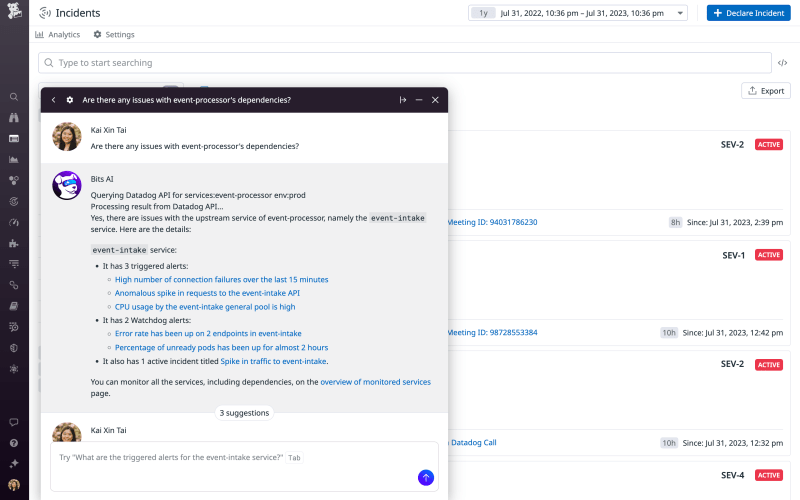

With the new Bits AI, Datadog is addressing this challenge by giving teams a helper that can assist with end-to-end incident management while responding to natural language commands. Accessible via chat within the company's platform, Bits learns from customers' data, covering everything from logs, metrics, traces and real-user transactions to sources of institutional knowledge like Confluence pages, internal documentation or Slack conversations. It uses that information to quickly answer questions about issues and to suggest troubleshooting or remediation steps in conversational language.

This ultimately streamlines users' workflows and reduces the time required to fix the problem at hand.

“LLMs are very good at interpreting and generating natural language, but presently they are bad at things like analyzing time-series data, and are often limited by context windows, which impacts how well they can deal with billions of lines of logging output,” Michael Gerstenhaber, VP of product at Datadog, told VentureBeat. “Bits AI does not use any one technology but blends statistical analysis and machine learning that we’ve been investing in for years with LLM models in order to analyze data, predict the behavior of systems, interpret that analysis and generate responses.”

Datadog uses OpenAI's LLMs to power Bits' capabilities. The assistant can coordinate a response by assembling on-call teams in Slack and keeping all stakeholders informed with automated status updates. And if the problem is at the code level, it provides a concise explanation of the error, along with a suggested code fix that can be applied in a few clicks and a unit test to validate that fix.

Notably, Datadog’s competitor New Relic has also debuted a similar AI assistant called Grok. It too uses a simple chat interface to help teams keep an eye on and fix software issues, among other things.

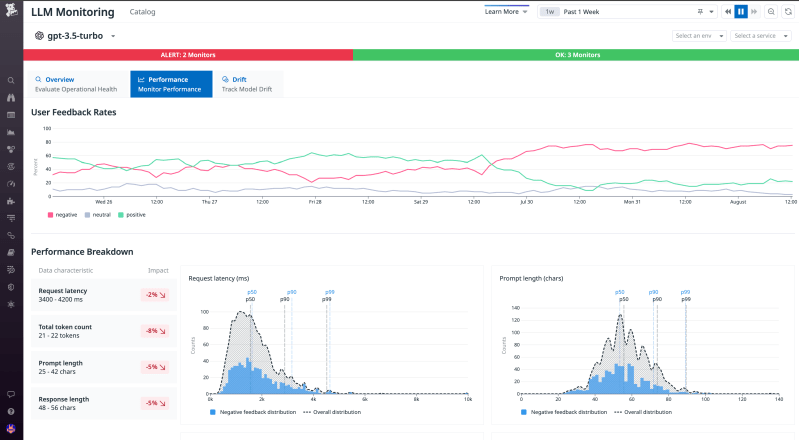

Along with Bits AI, Datadog also expanded its platform with an end-to-end solution for LLM observability. This offering stitches together data from gen AI applications, models and various integrations to help engineers quickly detect and resolve problems.

As the company explained, the tool can monitor and alert on model usage, costs and API performance. Plus, it can analyze the behavior of the model and detect instances of hallucinations and drift based on different data characteristics, such as prompt and response lengths, API latencies and token counts.
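Drift detection based on characteristics like response length or token count can be illustrated with a minimal sketch. This is not Datadog's method, which the company has not detailed. It assumes a simple approach: compare the mean of a recent window of a metric against a baseline window, and flag drift when the shift exceeds a threshold measured in baseline standard deviations. The function names `drift_score` and `is_drifting` and the default threshold of 3.0 are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def drift_score(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Hypothetical helper: how many baseline standard deviations the
    recent mean has moved away from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs >= 2 baseline points
    if sigma == 0:
        # A constant baseline: any change in the mean counts as unbounded drift.
        return 0.0 if mean(recent) == mu else float("inf")
    return abs(mean(recent) - mu) / sigma


def is_drifting(baseline: Sequence[float], recent: Sequence[float],
                threshold: float = 3.0) -> bool:
    """Hypothetical check: flag drift when the shift meets the threshold."""
    return drift_score(baseline, recent) >= threshold


if __name__ == "__main__":
    # Illustrative token counts per response (made-up values, not real data).
    baseline_tokens = [100, 110, 90, 105, 95]
    print(is_drifting(baseline_tokens, [200, 210, 190]))  # responses got much longer
    print(is_drifting(baseline_tokens, [101, 99]))        # roughly unchanged
```

A real system would track many such signals (latency, cost, prompt length) and likely use more robust statistics, but the mean-shift check shows the basic idea of comparing live behavior against a known-good baseline.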

While Gerstenhaber declined to share the number of enterprises using LLM Observability, he did note that the offering brings together what usually are two separate teams: the app developers and ML engineers. This allows them to collaborate on operational and model performance issues such as latency delays, cost spikes and model performance degradations.

That said, even here, the offering has competition. New Relic and Arize AI are both working in the same direction and have launched integrations and tools aimed at making LLMs easier to run and maintain.

Moving ahead, monitoring solutions like these are expected to be in demand, given the meteoric rise of LLMs within enterprises. Most companies today have either started using or are planning to use these tools (most prominently those from OpenAI) to accelerate key business functions, from querying their data stack to optimizing customer service.

Datadog’s DASH conference runs through today.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.
